[Figure panels: Layer 0 (input image); Layer 1 with box-brush option; Layer 1 output (boat); Layer 2 with mask-scroll option]

Streamlining Image Editing with Layered Diffusion Brushes

ICCV 2025

Denoising diffusion models have emerged as powerful tools for image manipulation, yet interactive, localized editing workflows remain underdeveloped. We introduce Layered Diffusion Brushes (LDB), a novel training-free framework that enables interactive, layer-based editing using standard diffusion models. LDB defines each "layer" as a self-contained set of parameters guiding the generative process, enabling independent, non-destructive, and fine-grained prompt-guided edits, even in overlapping regions. LDB leverages a unique intermediate latent caching approach to reduce each edit to only a few denoising steps, achieving 140 ms per edit on consumer GPUs. An editor implementing LDB, incorporating familiar layer concepts, was evaluated via user study and quantitative metrics. Results demonstrate LDB's superior speed alongside comparable or improved image quality, background preservation, and edit fidelity relative to state-of-the-art methods across various sequential image manipulation tasks. The findings highlight LDB's ability to significantly enhance creative workflows by providing an intuitive and efficient approach to diffusion-based image editing and its potential for expansion into related subdomains, such as video editing.

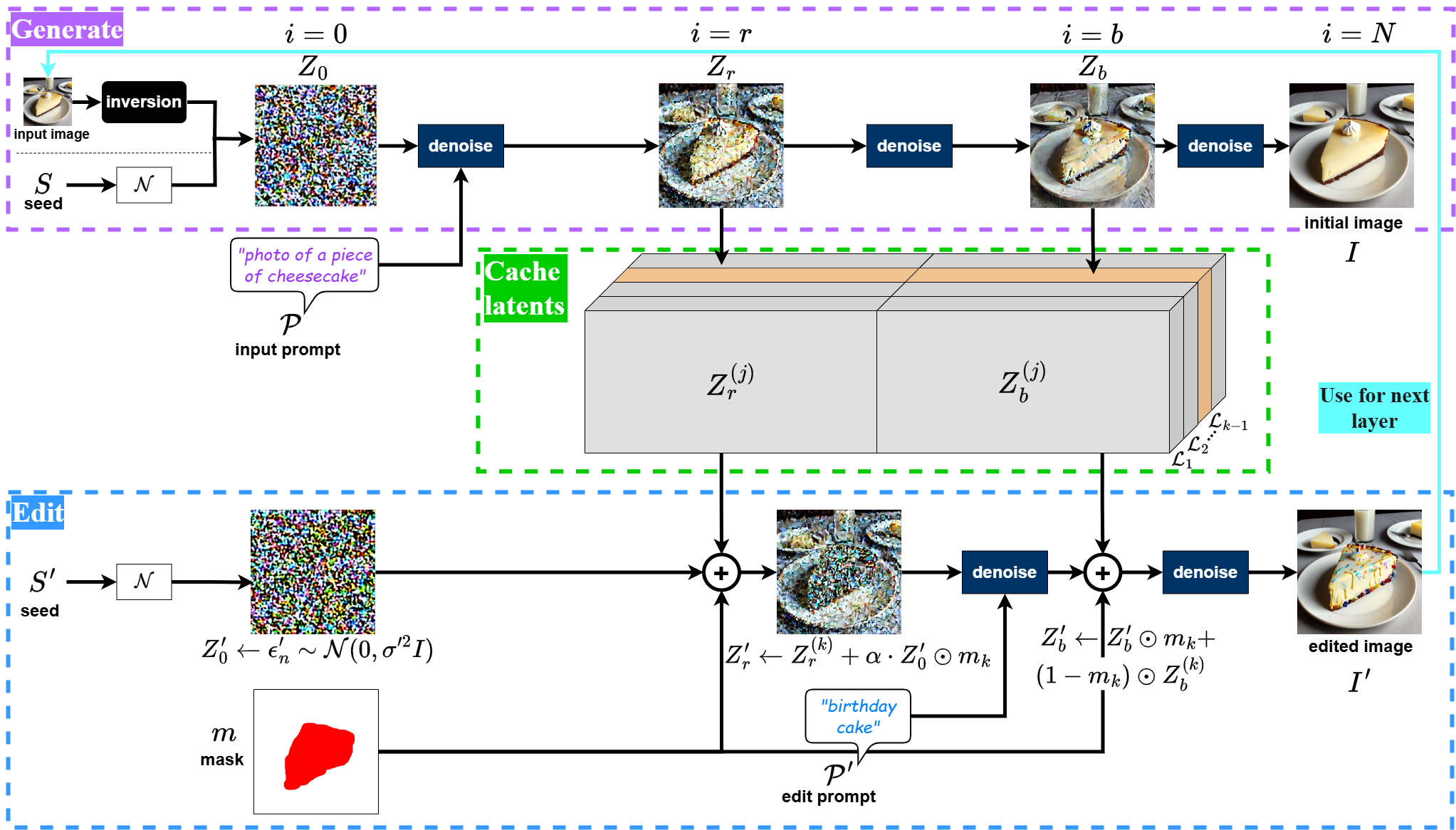

Layered Diffusion Brushes uses a Latent Diffusion Model-based approach for editing images. Our method is training-free and performs edits by making targeted adjustments to the intermediate latents of the input image during the diffusion process.

During the editing process, the algorithm combines a new noisy latent 𝑆′ with the original latent from step 𝑛 using a mask 𝑚 and a strength control 𝛼. The denoising procedure then proceeds by applying the edit prompt over several steps. At a specific step 𝑡 (where 𝑡 is fixed as 𝑁 − 2), the original latent and the newly modified latent are merged with the intermediate latent from the previous layer using masking. The final edited image
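The two masking operations described above can be illustrated with a small, dependency-free sketch. It is not the authors' implementation: the text does not give the exact blending formula, so the linear mix weighted by 𝛼·𝑚 in `inject_noise` is an assumption, as are the function names and the use of flat Python lists in place of latent tensors.

```python
from typing import List

Latent = List[float]  # flattened stand-in; real latents are multi-dimensional tensors


def inject_noise(original: Latent, noise: Latent, mask: Latent, alpha: float) -> Latent:
    """Mix new noise S' into the original latent, only where the mask is set.

    Assumption: a linear mix weighted by alpha * mask. The source only says
    that a mask and a strength control are used, not how they are combined.
    """
    return [(1.0 - alpha * m) * z + alpha * m * s
            for z, s, m in zip(original, noise, mask)]


def merge_with_previous(edited: Latent, previous: Latent, mask: Latent) -> Latent:
    """Keep the edited latent inside the mask and the previous layer's latent outside."""
    return [m * e + (1.0 - m) * p for e, p, m in zip(edited, previous, mask)]


original = [1.0, 1.0, 1.0, 1.0]
new_noise = [0.0, 2.0, -2.0, 0.0]
mask = [0.0, 1.0, 1.0, 0.0]  # edit only the two middle positions

seeded = inject_noise(original, new_noise, mask, alpha=0.5)
print(seeded)  # [1.0, 1.5, -0.5, 1.0]

# Stand-in for the latent after denoising the seeded region with the edit prompt.
denoised_edit = [9.0, 9.0, 9.0, 9.0]
previous_layer = [1.0, 2.0, 3.0, 4.0]
print(merge_with_previous(denoised_edit, previous_layer, mask))  # [1.0, 9.0, 9.0, 4.0]
```

In both steps the positions outside the mask are left untouched, which is the property that lets an edit stay local to the brushed region.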

Our method leverages a highly optimized pipeline combined with intermediate latent caching to achieve real-time performance. Using Layered Diffusion Brushes, a single edit on a 512×512 image can be rendered within 140 ms (up to 7 fps) on a high-end consumer GPU. This capability enables real-time feedback and rapid exploration of candidate edits.
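To make the caching idea concrete, here is a toy sketch of how storing one intermediate latent lets each edit re-run only the last few denoising steps. The class `CachedDenoiser`, the `halve` step function, and the step counts (50 total, cache at step 46) are hypothetical choices for illustration; the actual pipeline, scheduler, and cached step are not specified in this text.

```python
from typing import Callable, Dict, List

Latent = List[float]
StepFn = Callable[[Latent, int], Latent]  # hypothetical single denoising step


class CachedDenoiser:
    """Toy denoising loop that caches one intermediate latent for later edits."""

    def __init__(self, step_fn: StepFn, total_steps: int) -> None:
        self.step_fn = step_fn
        self.total_steps = total_steps
        self.cache: Dict[int, Latent] = {}
        self.steps_run = 0  # counts how many denoising steps were executed

    def full_pass(self, latent: Latent, cache_at: int) -> Latent:
        """Run every step once, saving the latent that enters step `cache_at`."""
        for i in range(self.total_steps):
            if i == cache_at:
                self.cache[i] = list(latent)
            latent = self.step_fn(latent, i)
            self.steps_run += 1
        return latent

    def edit_from_cache(self, cache_at: int, modify: Callable[[Latent], Latent]) -> Latent:
        """Apply an edit to the cached latent, then run only the remaining steps."""
        latent = modify(list(self.cache[cache_at]))
        for i in range(cache_at, self.total_steps):
            latent = self.step_fn(latent, i)
            self.steps_run += 1
        return latent


def halve(latent: Latent, step: int) -> Latent:
    """Stand-in for a real denoising step."""
    return [0.5 * v for v in latent]


def frames_per_second(ms_per_edit: float) -> float:
    return 1000.0 / ms_per_edit


denoiser = CachedDenoiser(halve, total_steps=50)
denoiser.full_pass([1.0], cache_at=46)
print(denoiser.steps_run)  # 50 steps for the initial pass

denoiser.edit_from_cache(46, lambda z: z)
print(denoiser.steps_run)  # 54: the edit cost only 4 more steps

print(round(frames_per_second(140.0), 1))  # 7.1
```

The last line reproduces the arithmetic behind the quoted frame rate: 140 ms per edit corresponds to roughly 7 edits per second.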

Layered Diffusion Brushes enables powerful image layering and independent editing within each layer. Our editor offers two modes of editing: box mode for brush dragging and custom mask mode for mask scrolling.

Users can stack, hide, unhide, delete, and adjust the strength of layers independently, ensuring global consistency and flexibility in layer modifications regardless of order. This allows for precise and non-destructive editing across different layers.
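One way to picture the layer operations is as a list of self-contained parameter records, where hiding or re-weighting a layer changes only a flag or a number and never discards the layer's settings. The sketch below is an assumed data model, not the editor's actual code: the `Layer` fields, the `LayerStack` methods, and the example prompts are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Layer:
    """Self-contained parameters for one edit (field names are assumptions)."""
    name: str
    prompt: str
    mask: List[int]
    strength: float = 1.0
    visible: bool = True


@dataclass
class LayerStack:
    layers: List[Layer] = field(default_factory=list)

    def add(self, layer: Layer) -> None:
        self.layers.append(layer)

    def find(self, name: str) -> Layer:
        for layer in self.layers:
            if layer.name == name:
                return layer
        raise KeyError(name)

    def set_visible(self, name: str, visible: bool) -> None:
        self.find(name).visible = visible  # parameters stay intact while hidden

    def set_strength(self, name: str, strength: float) -> None:
        self.find(name).strength = strength

    def delete(self, name: str) -> None:
        self.layers = [layer for layer in self.layers if layer.name != name]

    def render_plan(self) -> List[str]:
        """Names of the layers that would contribute to the rendered image."""
        return [layer.name for layer in self.layers
                if layer.visible and layer.strength > 0]


stack = LayerStack()
stack.add(Layer("Layer 1", prompt="boat", mask=[0, 1, 1, 0]))
stack.add(Layer("Layer 2", prompt="autumn leaves", mask=[1, 1, 0, 0]))

stack.set_visible("Layer 1", False)
print(stack.render_plan())  # ['Layer 2']

stack.set_visible("Layer 1", True)  # unhide: the prompt and mask were never lost
stack.set_strength("Layer 2", 0.4)
print(stack.render_plan())  # ['Layer 1', 'Layer 2']
```

Because each record carries everything needed to regenerate its edit, toggling one layer does not require touching the others, which is the sense in which the edits are non-destructive.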


@InProceedings{Gholami_2025_ICCV,
    author    = {Gholami, Peyman and Xiao, Robert},
    title     = {Streamlining Image Editing with Layered Diffusion Brushes},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
    month     = {October},
    year      = {2025},
    pages     = {17368-17378}
}